This post is a lightly edited version of the talk I gave during the 2022 “3 Minute Thesis” competition at Queen’s University.

When we think about artificial intelligence, we often assume that the artificial part is what will be challenging. After all, in engineering we want to build solutions that in some sense contain the problem they are trying to solve. And we know that it takes a lot of effort to build something as capable as Deep Blue or Watson.

But the real difficulty is figuring out what we mean by intelligence. And that’s important, because as a society we are building systems that are supposed to be intelligent. Companies are selling us everything from self-driving cars to home-care robots, and these AI systems are supposed to give us superhuman performance in the real world. But how can we decide whether we are ready to accept these AI systems into our lives? That’s where my research comes in, because if we want to build intelligent systems, we should imitate the most successful intelligent system we know: the human brain.

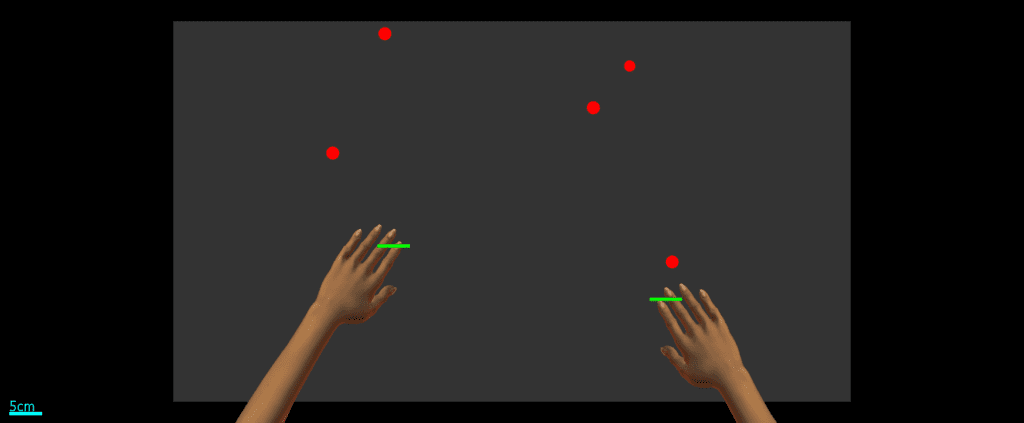

I started my research by asking: how do humans decide to move? Movements are great for analysing this kind of question because we have evolved to move around in our environment, and because we can connect our movements back to the original decisions that led to them. In our task, we asked human participants to hit as many falling objects as they could in a virtual workspace, so that we could learn how they decided which object to hit with which paddle. It turns out this is a tough question to answer, because the task quickly becomes overwhelming, which means that people cut corners.

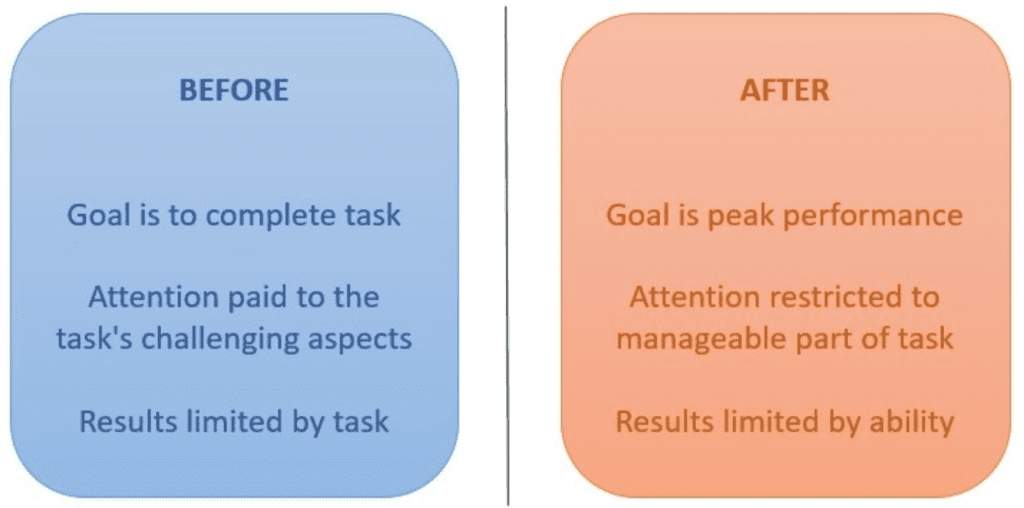

What I mean is that there are a lot of competing processes in this task: perception, decision-making, and movement all have to happen quickly. And while this makes it hard for us as experimenters to figure out exactly what’s happening, it actually sheds light on something very important. As the task’s difficulty increased, people had to focus their attention on the parts of the task they were struggling with. But beyond a certain point, people stopped trying to hit all of the objects and focused their attention on what they could achieve. So we see that, as intelligent agents, humans find a way to make an overwhelmingly complex world manageable. And this makes sense, because our goal in life isn’t to be perfect. Our goal is to survive.

So the big lesson from my research is that human attention is an important characteristic of human intelligence. As humans, we have to be adaptable to keep up with a changing and challenging world, and AI systems will have to be as well. So maybe when we are building AI systems, we should take a cue from human intelligence.

Maybe instead of thinking about optimality, we should think about adaptability.

And maybe instead of trying to build AI systems that are perfect, we should ask how we can build AI systems that are adaptable enough to be ready for real life.